Will Overuse of AI Cause Cognitive Debt for Learners?

There is no shortage of jargon in the field of AI. New terms, acronyms, and phrases migrate from the technosphere to common parlance in a matter of weeks.

Examples include “large language models,” “chatbots,” “prompt engineering,” “algorithms,” and “hallucinations.”

The phrase that should draw the most attention from educators is “cognitive debt,” which can be described as “what happens when you rely too much on shortcuts - using AI tools or not thinking deeply about a problem - instead of putting in the mental effort yourself.” The idea is that while these shortcuts might make things easier or help you get things done faster in the short term, they can actually make it harder for you to learn, remember, and think critically in the long run.

Here is a synopsis of the thinking that commonly appears in the popular press: If a student always uses an LLM such as ChatGPT or Gemini to write essays instead of producing their own ideas, they might not develop the skills to think independently or critically. Over time, this “debt” builds up, and when they have to think for themselves, they might struggle more than if they had practiced doing it all along.

The issue gained even more attention in June with the release of an MIT study called Your Brain on ChatGPT: Accumulation of Cognitive Debt When Using an AI Assistant for Essay Writing Task. The student participants were divided into three groups: One group used ChatGPT for writing, another used Google for web search, and the third used no resources at all.

The abstract tells a clear story: “Self-reported ownership of essays was the lowest in the Large-Language Model (LLM) group and the highest in the Brain-only group. LLM users also struggled to accurately quote their own work. While LLMs offer immediate convenience, our findings highlight potential cognitive costs. Over four months, LLM users consistently underperformed at neural, linguistic, and behavioral levels. These results raise concerns about the long-term educational implications of LLM reliance and underscore the need for deeper inquiry into AI's role in learning.”

We’ve Heard Similar Arguments Before

People in my age group are fond of beginning sentences with the phrase “I'm so old …” followed by a reference to how things were back in the good old days. This dynamic holds true in education, too, where teachers can cite a long list of technological advancements that robbed learners of their ability to think critically, remember, spell, and calculate.

The strict sisters at St. Anthony’s High School taught me to use a slide rule (look it up, youngsters) to perform calculations in both my chemistry and calculus classes. Texas Instruments released the TI-30 in 1976, too late to do me any good. The outcry from teachers was immediate: They claimed that unfettered use of these machines would rob learners of their ability to manipulate numbers.

Although I know of no study showing a direct correlation between spelling ability and intelligence, that didn’t stop educators from decrying the arrival of spellcheck and auto-correct. Both WordPerfect and WordStar, long-ago ancestors of Word, Pages, and Google Docs, integrated spellcheck in the mid-1980s.

Microsoft introduced auto-correct to Word in 1993; we have suffered the textual consequences ever since. I have a theory that the contagion of misspellings has led to the growing popularity of the Scripps National Spelling Bee because viewers see this skill as akin to magic.

In the early 1990s, I explored the nascent Internet using one of the first web browsers (Mosaic), but it wasn’t until the 2008 release of Google Chrome that educators began to lament the irreparable harm this web browser would cause learners. Columbia University psychologist Betsy Sparrow led a series of studies on what became known as the “Google Effect,” which showed that when people know information is readily accessible online, they tend not to commit it to memory.

So yes, cognitive debt driven by technological advances has been a common complaint among educators for 50 years. As AI becomes more capable and widely used, the problem could worsen.

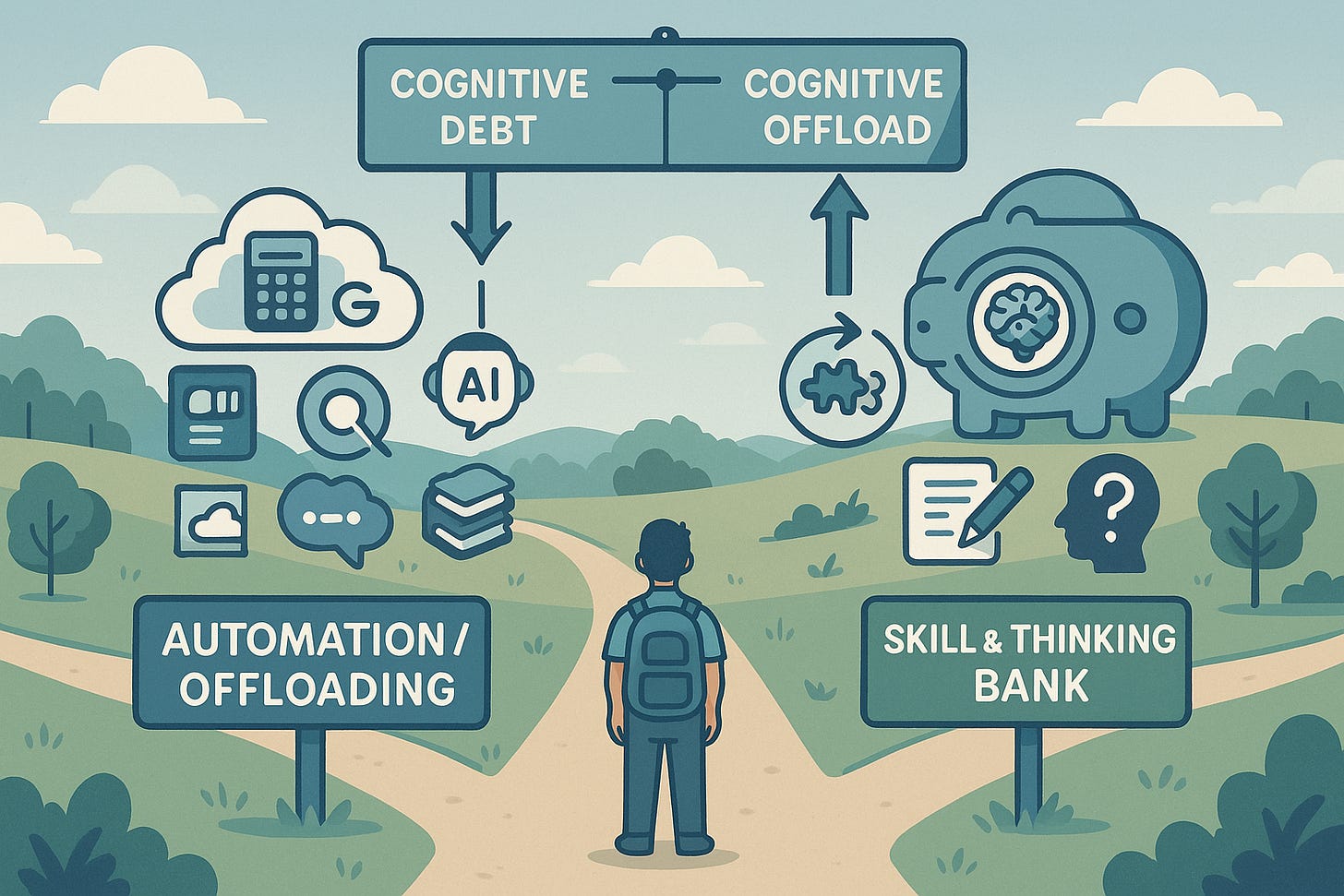

Cognitive Debt vs. Cognitive Offloading

I went to the doctor’s office the other day for a checkup, finished quickly, then scheduled a follow-up at the front desk. They asked me if I wanted a reminder card (made out of this thing called paper) for my next appointment. I told them I use a fancy tool called a mobile phone. That, my friends, is how cognitive offloading works.

More precisely, cognitive offloading is when you use tools or other outside help to take some of the mental work off your brain. This helps free your mind so you can focus on important matters and makes it easier to complete tasks without getting mentally tired.

In the classroom, using technology for cognitive offloading can support the primary outcome of modern instruction: Higher-order thinking. By relying on computers for information storage (notes), students can focus on the upper levels of Bloom’s taxonomy: Creativity, evaluation, and synthesis.

Researchers, though, are trying to distinguish between the use of simple technological tools for cognitive offloading and the use of AI-powered tools that further the decline of higher-order thinking skills. Teachers can be fans of cognitive offloading while deploring the development of cognitive debt.

So how do we do that?

Strategies to Combat Cognitive Debt

I did a lot of reading, thinking, and AI-powered research while trying to find classroom activities or strategies that would maintain the benefits of cognitive offloading while reducing the development of cognitive debt. This is a short list of what I came up with:

1. AI as a Drafting Partner, Not a Final Author

Activity Process: Students use an LLM to generate an outline or rough first draft based on their own prompt. Then, students annotate the draft, identifying weak logic, generic phrasing, or surface-level content. Their final product must include a reflection explaining which parts were edited, replaced, or expanded, and why.

2. Double Response Protocol: Human First, AI Second

Activity Process: For any complex question (e.g., historical analysis, math word problem, ethical debate), students first write their own answer or plan. Then they use an AI tool to generate a second version. Students compare and analyze discrepancies, improvements, or flaws in both responses and write a meta-analysis.

3. Source Check Challenge

Activity Process: After generating content with an AI tool, students must verify three key claims using academic or other credible sources. They note whether the AI’s response was accurate, misleading, or incorrect and then revise accordingly. Extension: Introduce an AI-generated bibliography and have students audit it for accuracy.

4. Prompt Engineering for Higher-Order Thinking

Activity Process: Teach students how to use advanced prompt strategies: “Compare and contrast,” “Design an argument,” “Summarize then critique,” etc. Students iterate on prompts to get deeper or more nuanced responses. They submit a prompt-response map showing how different prompts led to different types of thinking.

5. Co-Authoring with AI: Who Contributed What?

Activity Process: In group projects or essays, students must use AI as a collaborator, clearly labeling what AI generated and what they wrote. A final presentation must explain how AI helped and how they made the final decisions, edits, and synthesis.

Educators can’t afford to ignore the risks of cognitive debt. New research continues to remind us that this problem is growing. Cognitive debt incurred in the school years will resound across the decades as students take on increasingly important roles in community and industry. Once again, we must be proactive in teaching skills that increase human capacity, not reduce it. Have you ever watched the Disney film WALL-E? That is not a future I want for our kids.